CODECHECK tackles one of the main challenges of computational research by supporting codecheckers with a workflow, guidelines and tools to evaluate computer programs underlying scientific papers. The independent time-stamped runs conducted by codecheckers will award a “certificate of executable computation” and increase availability, discovery and reproducibility of crucial artefacts for computational sciences. See the CODECHECK paper for a full description of problems, solutions, and goals and take a look at the GitHub organisation for examples of codechecks and the CODECHECK infrastructure and tools.

CODECHECK is based on five principles which are described in detail in the project description and the paper.

- Codecheckers record but don’t investigate or fix.

- Communication between humans is key.

- Credit is given to codecheckers.

- Workflows must be auditable.

- Open by default and transitional by disposition.

The principles can be implemented in different processes, one of which is the CODECHECK community workflow. If you want to get involved as a codechecker in the community, or if you want to apply the CODECHECK principles in your journal or conference, please take a look at the Get Involved page.

To stay in touch with the project, follow us on social media at https://fediscience.org/@codecheck.

News

2026-03 | Love Replications Week 2026 ❤️

CODECHECK is honoured to be part of the excellent programme of the first Love Replications Week of 2026. Find the events curated by the great folks from FORRT and MüCOS at 🔗 https://forrt.org/LoveReplicationsWeek/. Don’t miss to scroll down to the full programm and find interesting and educational events. On Tuesday, Daniel presented CODECHECK - find his slides on “How to get your reproducibility certificate with CODECHECK” on Zenodo: https://doi.org/10.5281/zenodo.18849086.

2025-08 | Institutional CODECHECKs launched in the Netherlands 🏛️

Two organisations in the Netherlands recently started offering CODECHECKs as an institutional service: the TU Delft Digital Competence Centre (DCC) together with 4TU.ResearchData and the Amsterdam University Medical Center (AUMC). Find out more on the new page for institutional CODECHECKs at https://codecheck.org.uk/institutions and read the blog post “CODECHECK in Practice: How TU Delft and 4TU.ResearchData Are Making Reproducibility Happen” (see also on LinkedIn) by the DCC team and the report “Computational reproducibility at Amsterdam UMC” by the AUMC team, which includes experiences from their first few checks and their future plans.

2025-07 | CODECHECK mentioned in OSIRIS report “10 Tales of Reproducibility” 🐉

The OSIRIS project (Open Science to Increase Reproducibility in Science) is dedicated to reforming the Research and Innovation system, enhancing global acceptance, practice, and recognition of reproducibility in scientific research. In their report “Ten Tales of Reproducibility”, they highlight the CODECHECKs at Amsterdam UMC as a community-driven effort to make reproducible research standar practice. They also shared the “tale 3” on here on LinkedIn.

Read the full report.

2025-06 | First check for OSF’s Lifecycle Journal ♻️

CODECHECK is one of the Evaluation Services or a new journal started by the Open Science Framework (OSF), the Diamon Open Access Journal Lifecycle Journal. We are excited to join this great initiative for reimagining scholarly publishing for the initial period of 2025 and look forward to working with the Lifecycle Journal team and the community. If you work in one of the fields covered by the journal, please consider submitting your work to the journal and sharing a reproducible workflow with it!

Find all CODECHECKs for the Lifecycle Journal at https://codecheck.org.uk/register/venues/journals/lifecycle_journal/.

2025-05 | CHECK-NL project completed 🇳🇱

The Codechecking-NL project team published a final blog post and presents their experiences and the future of the Dutch community - read Advancing reproducibility and Open Science one workshop at a time - community-building in the Netherlands.

The group also shares their event recipe for running a local CODECHECK event on Zenodo and on the website here. No excuses anymore, you can now run your own CODECHECK event!

2025-04 | CHECK-PUB project started 🚀

TU Delft Library supports the development of a new building block for the CODECHECK initiative: a plugin for Open Journal Systems (OJS) to support the CODECHECK process in journals. In the CHECK-PUB project, we will develop a prototype of the plugin and look for journals to collaborate on real-world test scenarios. 👉 Learn more about the project at codecheck.org.uk/pub/.

2025-03 | Tutorial at AGILE 2025 conference 🧑🎓

On Tuesday, June 10, 2025 a half-day workshop and tutorial “Open and reproducible research best practices: Codechecks, AGILE repro reviews, and reprohacks for your research” will take place in Dresden, Germany, as part of the AGILE 2025 conference. The event includes hands-on work in small groups to train codecheckers and is a continuation of the close collaboration between the Reproducible AGILE initiative and CODECHECK, more specifically the CHECK-NL project.

Learn more at the event website: https://codecheck.org.uk/nl/agilegis-2025.html

2025-01 | Blog post by Eduard Klapwijk on author’s perspective 🖊️

What is the experience for authors in a CODECHECK? Eduard Klapwijk (https://orcid.org/0000-0002-8936-0365), research data steward at Erasmus School of Social and Behavioural Sciences (ESSB), Erasmus University Rotterdam, and eScience fellow answers it from his perspective. His experiences feed into the project Implementing institutional reproducibility checks to promote good computational practices which he pursues as part of his fellowship - see the ESSB Repro Checks website for more information. He describes his research workflow, the changes he made in the context of the community CODECHECK, and shares useful reflections for authors and the CODECHECK initiative.

Read the blog on the ESSB Repro Checks website and on the Netherlands eScience Center Medium page and check out the CODECHECK Certificate 2024-005 by Lukas Röseler.

2024-11 | CODECHECK at ASSA summer school in Eindhoven 🔉

On the last day of the 🍁 Autumn School Series in Acoustics at Eindhoven University of Technology, the autum school team of Maarten Hornikx and Huiqing Wang organized a track on open research software in the computational acoustics.

This day is part of the NWO (Dutch Research Council) open science fund project Unlocking the impact potential of research software in acoustics, driven by the TU/e Building Acoustics team, which targets to to foster a culture change regarding good practices in open science regarding the development and dissemination of research software in acoustics. After a successful workshop in Nantes, the team now targeted early career researchers.

The day kicked off with talks about the potential and future of open research software, best practices in open research software, and instructions and guidelines for acceleration of sharing research software, by Maarten and Huiqing.

One particular aspect of research software is related to reproducible research. In the afternoon, participants carried out a CODECHECK process, targeting the independent execution of computations underlying research articles. While the purpose of the afternoon was to experience running open research software developed by colleagues and getting experience with the process of codecheck, we have had great discussions on what we need in our community to bring research software further for the sake of quality, reproducibility, collaboration and impact of research.

Outcomes of this workshop and the previous workshop will be shared, such that we indeed can take the right step forward in our community!

🙏🏻 Thanks to Frank Ostermann, Daniel Nüst and Daniela Gawehns for the support from CODECHECK!

(This news item was previously published on LinkedIn.)

2024-04 | CODECHECK wins Team Credibility Prize 🏆

The British Neuroscience Association (BNA) have awarded the 2024 Team Credibility Prize to CODECHECK for our work on reproducibility. Further details.

2024-03 | Dutch Research Council (NWO) supports CODECHECK 🇳🇱

We are happy to announce a one-year grant from the Dutch Research Council (NWO) to start various CODECHECK activities in the Netherlands. The project is led by Frank Ostermann (University of Twente) with colleagues from Delft and Groningen. Further details.

2023-09 | CODECHECK and TU Delft Hackathon 💻

TU Delft and CODECHECK run a hackathon on 18th September 2023.

👉 Read the report in the TU Delft Open Publishing Blog: https://openpublishing.tudl.tudelft.nl/tu-delft-codecheck-hackathon-some-perspectives/ 👈

📓 The shared notes are available on the following pad: https://hackmd.io/77AIvx0qRRWGvo1D2k_t8A

The hybrid event is jointly organised by TU Delft Open Science, TU Delft OPEN Publishing, the CODECHECK team, and friends. The workshop features live codechecking of workflows by researchers from TU Delft and is both suitable for hands-on participation, observing, and discussing. The goal is to explore building a local CODECHECK community whose members may check each others code, e.g., before a preprint is published or a manuscript is submitted.

2022-11 | Introduction to CODECHECK Video 📺

Follow us on YouTube: https://www.youtube.com/@cdchck

2022-11 | Panel participation in “How to build, grow, and sustain reproducibility or open science initiatives” 🌱

CODECHECK team member Daniel Nüst had the honour to participate in a panel discussion on November 23rd 2022. The German Reproducibility Network (GRN) organised the two-day event “How to build, grow, and sustain reproducibility or open science initiatives: A virtual brainstorming event”. Learn more about the evenet and this asynchronous unconference-style meeting format on the website. The event was accompanied by a live and interactive and the panel discussion on the same topic. The panelists were representatives of the German Reproducibility Network (GRN) and actively involved in initiatives that focus on open science, open code, guidelines and research practices, as well as quality management, among other things.

Daniel thanks the other panelists for the interesting conversation: Carsten Kettner, Céline Heinl, Clarissa F. D. Carneiro, and Maximilian Frank. We also thank the organization team from GRN steering group (Antonia Schrader, Tina Lonsdorf, Gordon Feld) and moderator Tracey Weissgerber from BIH QUEST Center @ Charité Berlin.

2022-09 | CODECHECK Hackathon @ OpenGeoHub Summer School 🏫

Markus Konkol (https://github.com/MarkusKonk, https://twitter.com/MarkusKonkol), research software engineer at 52°North and codechecker, organised a CODECHECK hackathon as part of the OpenGeoHub summer school. He reports on his experiences in a blog post in the 52°North blog at https://blog.52north.org/2022/09/16/opengeohub-summer-school-facilitating-reproducibility-using-codecheck/. It’s great to see that codechecking is a suitable evening pastime activity and that participants took some nice learnigns away from the experience of codechecking. Check out the quotes in the blog post!

Thanks, Markus, for spreading the word about CODECHECK and for introducing more developers and software-developing researchers of the need for their expertise during peer review.

2022-06 | AGILE Reproducibility Review 2022 ✅

The collaboration between CODECHECK and the AGILE conference series continues! In 2022, the AGILE conference’s reproducibility committee conducted 16 reproductions of conference full papers. Take a look at the slides presented at the final conference day here. The reproducibility review took place after the scientific review. The reproducibility reports, the AGILE conference’s are published on OSF at https://osf.io/r5w79/ and listed in the CODECHECK register.

Learn more about the Reproducible AGILE initiative at https://reproducible-agile.github.io/.

2022-04 | CODECHECK talks 💬

The CODECHECK team is grateful about the continued interest from the research community on the topic of evaluating code and workflows as part of scholarly communication and peer review.

Stephen gave a talk at the 2022 Toronto Workshop on Reproducibility organised by Rohan Alexander. You can find the slides online and also watch the recording on YouTube - very worth a look because of the great Q&A at the end!

Stephen presented CODECHECK: An Open Science initiative for the independent execution of computations underlying research articles during peer review to improve reproducibility (slides) in May 2021 at the Reproducibility Tea Southhampton.

Daniel gave the keynote at the Collaborations Workshop 2022 (CW22) on April 4, 2022, organised by the Software Sustainability Institute (SSI), UK entitled Code execution during peer review (slides, PDF, video) and presented CODECHECK as well as the partnering initiative Reproducible AGILE.

2021-07 | F1000Research paper on CODECHECK published after reviews 📃

The F1000Research preprint presented below has passed peer review and is now published in version 2. We are grateful for the two reviewers, Nicolas P. Rougier and Sarah Gibson, who gave helpful feedback and asked good questions that helped to improve the paper.

Nüst D and Eglen SJ. CODECHECK: an Open Science initiative for the independent execution of computations underlying research articles during peer review to improve reproducibility [version 2; peer review: 2 approved]. F1000Research 2021, 10:253 (https://doi.org/10.12688/f1000research.51738.2)

The F1000 blog also features the article with a little Q&A: https://blog.f1000.com/2021/09/27/codecheck. Thanks Jessica for making that happen!

2021-04 | CODECHECK @ ITC ✅

CODECHECK supporter Markus Konkol has built a CODECHECK process for all researchers at the University of Twente’s Faculty of Geo-Information Science and Earth Observation (ITC). He offers his expertise to codecheck manuscripts and underlying source code and data before submission or preprint publication, so even if the information is still not publicly shared. His reports will then go public on Zenodo when the paper comes out, just like a regular CODECHECK, and can support the article’s claims. If timed right, authors can even link to the certificate before submission. This is a great service for ITC researchers and their reviewers and readers!

Learn more at https://www.itc.nl/research/open-science/codecheck/ and see an example at https://doi.org/10.5281/zenodo.5106408.

2021-03 | F1000Research preprint 📄

A preprint about CODECHECK was published at F1000Research and is now subject to open peer review. It presents the codechecking workflow, describes involved roles and stakeholders, presents the 25 codechecks conducted up to today, and details the experiences and tools that underpin the CODECHECK initiative. We welcome your comments!

Nüst D and Eglen SJ. CODECHECK: an Open Science initiative for the independent execution of computations underlying research articles during peer review to improve reproducibility [version 1; peer review: awaiting peer review]. F1000Research 2021, 10:253 (https://doi.org/10.12688/f1000research.51738.1)

2020-06 | Nature News article 📰

A Nature News article by Dalmeet Singh Chawla discussed the recent CODECHECK #2020-010 of a simulation study, including some quotes by CODECHECK Co-PI Stephen J. Eglen and fellow Open Science and Open Software experts Neil Chue Hong (Software Sustainability Institute, UK) and Konrad Hinsen (CNRS, France).

Singh Chawla, D. (2020). Critiqued coronavirus simulation gets thumbs up from code-checking efforts. Nature. https://doi.org/10.1038/d41586-020-01685-y

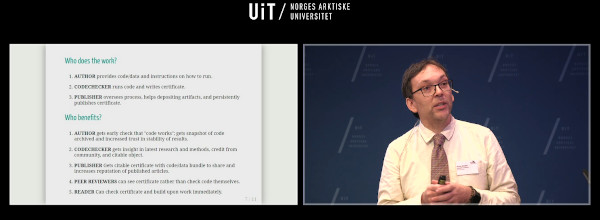

2019-11 | MUNIN conference presentation 💯

Stephen Eglen presented CODECHECK at The 14th Munin Conference on Scholarly Publishing 2019 with the submission “CODECHECK: An open-science initiative to facilitate sharing of computer programs and results presented in scientific publications”, see https://doi.org/10.7557/5.4910.

Take a look at the poster and the slides.